Why is latency an important factor in networking and internet speed?

Latency is a critical factor in networking and internet speed because it directly affects how quickly data travels across a network and how responsive applications and services feel to users. Understanding latency involves exploring its causes, impacts, and ways to mitigate it for better network performance.

What is Latency?

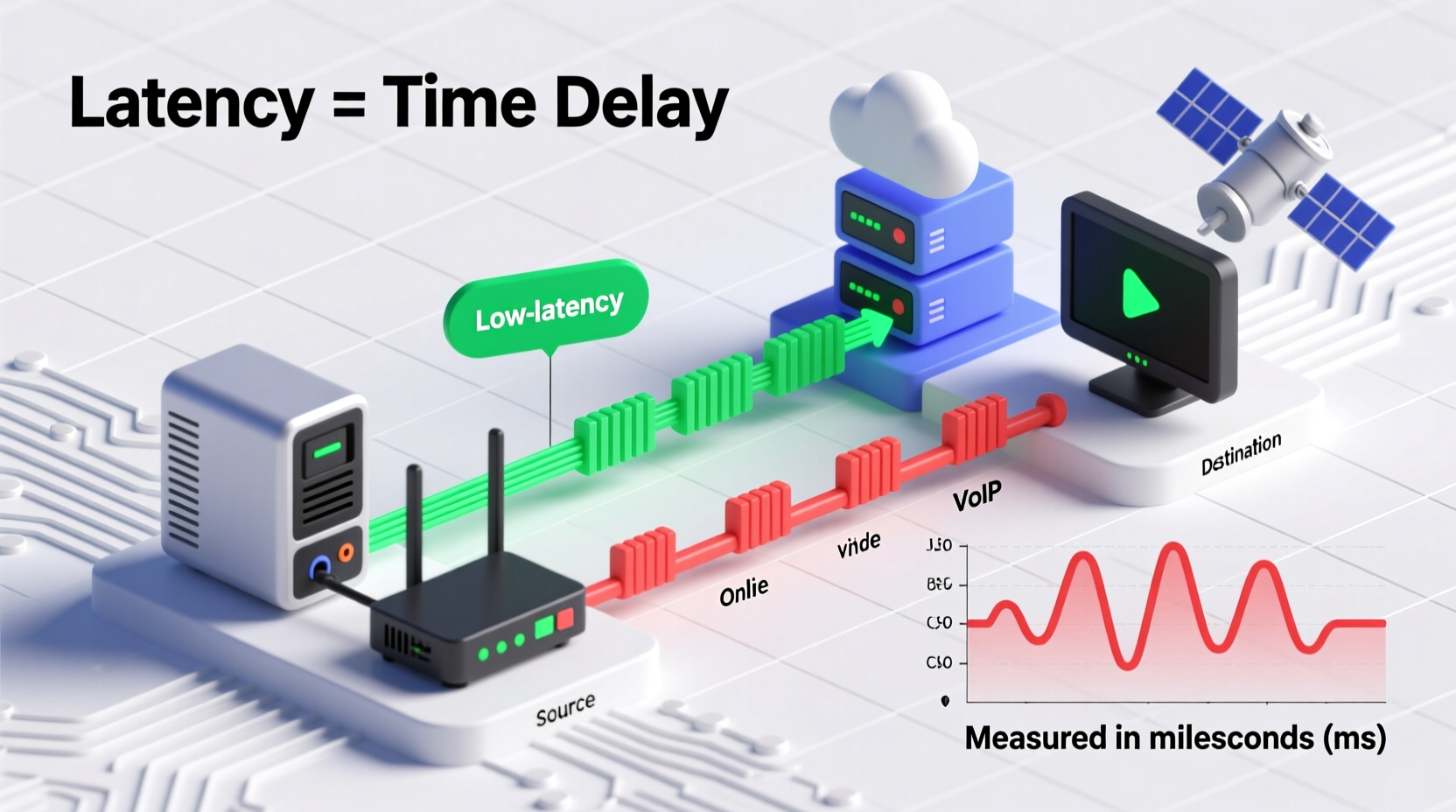

In networking, latency is the time delay between a user action and the network's response. More technically, it is the time it takes for a data packet to travel from the source device to its destination across a network, usually measured in milliseconds (ms). Many tools, such as ping, report round-trip time (RTT) instead: the time for a packet to reach its destination plus the time for the reply to come back. In internet and networking contexts, low latency means faster communication and more seamless user experiences, while high latency causes noticeable delays.
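
To make the definition concrete, here is a minimal Python sketch that measures round-trip time (RTT), the time for a packet to reach its destination and for a reply to come back. It works in the same spirit as ping, which uses ICMP echo packets: timestamp a small packet, wait for the echo, and subtract. It assumes a local UDP echo server on the loopback address (127.0.0.1) that the script starts itself, so the results reflect only operating-system and socket overhead, not real network distance. The function names are hypothetical, chosen for illustration.

```python
import socket
import threading
import time


def _echo(server: socket.socket, count: int) -> None:
    """Send each received datagram straight back to its sender."""
    for _ in range(count):
        data, addr = server.recvfrom(1024)
        server.sendto(data, addr)


def measure_rtt_ms(samples: int = 5) -> list[float]:
    """Time `samples` small UDP round trips to a local echo server, in milliseconds."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.bind(("127.0.0.1", 0))  # port 0 asks the OS for any free port
    server.settimeout(2.0)         # so the echo thread cannot block forever
    port = server.getsockname()[1]
    worker = threading.Thread(target=_echo, args=(server, samples), daemon=True)
    worker.start()

    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client.settimeout(2.0)
    rtts = []
    try:
        for i in range(samples):
            payload = f"probe {i}".encode()
            start = time.perf_counter()
            client.sendto(payload, ("127.0.0.1", port))
            reply, _ = client.recvfrom(1024)
            # Elapsed time covers the trip out and the trip back.
            rtts.append((time.perf_counter() - start) * 1000.0)
            if reply != payload:
                raise RuntimeError("unexpected reply")
    finally:
        worker.join(timeout=2.0)
        client.close()
        server.close()
    return rtts


if __name__ == "__main__":
    rtts = measure_rtt_ms(5)
    print(f"min {min(rtts):.3f} ms, avg {sum(rtts) / len(rtts):.3f} ms")
```

Pointing the same timing pattern at a remote host would add propagation, hop, and queuing delay on top of this local baseline.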

Causes of Latency

Several factors influence network latency:

- Physical Distance: The farther data must travel, the higher the latency. For example, sending data from your local device to a server on another continent takes longer because signals need time to travel along cables or wireless links. This propagation delay is a fundamental cause of latency: even in optical fiber, signals travel at only about two-thirds the speed of light, roughly 200 km per millisecond.

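- Transmission Medium: Different transmission media carry signals with different characteristics. Fiber optic cables carry data over long distances with high bandwidth and few repeaters, which generally keeps latency low on long routes, while wireless connections are more prone to interference and retransmissions. Satellite links add large delays because signals must travel up to orbit and back down. Converting signals between media types at network interfaces adds a small amount of extra delay.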

- Number of Network Hops: Data packets often pass through multiple devices, such as routers, switches, and firewalls, before reaching their destination. Each device must receive, inspect, and forward every packet, adding a small processing delay that accumulates with each hop.

- Network Congestion and Traffic Volume: When many devices compete for bandwidth on the same links, congestion occurs. Packets wait in device buffers, increasing queuing delay, and if the buffers fill up, packets are dropped and must be retransmitted, which adds further delay.

- Server Performance: Some latency comes not from the network path at all but from servers that respond slowly to requests, for example because of slow database queries, heavy computational loads, or limited CPU, memory, or disk resources.

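- Protocol Overhead: Protocols like TCP require a handshake (an exchange of SYN, SYN-ACK, and ACK packets) before data transfer begins, which adds at least one round trip of delay. UDP skips the handshake and has no acknowledgments or retransmissions, so it adds less overhead but does not guarantee that data arrives, or arrives in order.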

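- Hardware Quality and Configuration: Outdated or poorly configured routers and switches can increase packet processing times and cause inefficient routing, while slow or misconfigured DNS servers delay the name lookup that must finish before a connection can even start.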

- Environmental and External Factors: Weather and other external events can disrupt signals, especially in wireless and satellite networks, causing errors and retransmissions that add to latency unpredictably.
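
The first four causes above map onto the commonly used per-hop delay components: propagation (distance divided by signal speed), transmission (packet size divided by link bandwidth), processing, and queuing. The Python sketch below adds them up along a path. The `Link` class, its fields, and the signal speed of 2 × 10^8 m/s (roughly two-thirds the speed of light, a common approximation for fiber) are illustrative assumptions, not measured values.

```python
from dataclasses import dataclass

# Assumed signal speed in optical fiber: about two-thirds the speed of light.
FIBER_SPEED_M_PER_S = 2.0e8


@dataclass
class Link:
    """One hop on a path (hypothetical fields chosen for illustration)."""
    distance_m: float    # length of the cable for this hop
    rate_bps: float      # link bandwidth in bits per second
    processing_s: float  # time the forwarding device spends handling the packet
    queuing_s: float     # time the packet waits in that device's buffer


def one_way_delay_s(path: list[Link], packet_bytes: int,
                    speed_m_per_s: float = FIBER_SPEED_M_PER_S) -> float:
    """Sum propagation, transmission, processing, and queuing delay over all hops."""
    bits = packet_bytes * 8
    total = 0.0
    for hop in path:
        propagation = hop.distance_m / speed_m_per_s
        transmission = bits / hop.rate_bps
        total += propagation + transmission + hop.processing_s + hop.queuing_s
    return total


if __name__ == "__main__":
    # Ten identical 600 km hops, 1 Gbit/s links, 50 microseconds of processing, empty queues.
    path = [Link(600_000, 1e9, 50e-6, 0.0) for _ in range(10)]
    print(f"{one_way_delay_s(path, 1500) * 1000:.2f} ms")  # prints "30.62 ms"
```

With these assumed values, 30 ms of the 30.62 ms total is propagation, which is why distance tends to dominate on long routes; each extra hop adds processing time, and congestion adds queuing time on top.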
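
Queuing delay does not grow in a straight line with traffic. One textbook way to see this is the M/M/1 queueing model, which assumes random (Poisson) packet arrivals, exponentially distributed service times, a single output link, and an unlimited buffer. Real routers deviate from all of these assumptions, so the numbers are only qualitative. In that model, the mean time a packet waits before being sent is ρ / (μ − λ), where λ is the arrival rate, μ is the service rate, and ρ = λ / μ is the utilization. The function name below is hypothetical.

```python
def mm1_mean_queuing_delay_s(arrival_rate: float, service_rate: float) -> float:
    """Mean time a packet waits in the queue (excluding its own service), M/M/1 model.

    Rates are in packets per second. The model is only stable when
    arrival_rate < service_rate; otherwise the queue grows without bound.
    """
    if arrival_rate >= service_rate:
        return float("inf")
    utilization = arrival_rate / service_rate
    return utilization / (service_rate - arrival_rate)


if __name__ == "__main__":
    service = 100_000.0  # assumed: the link forwards 100,000 packets per second
    for load in (0.5, 0.9, 0.99):
        wait = mm1_mean_queuing_delay_s(load * service, service)
        print(f"utilization {load:.0%}: mean queuing delay {wait * 1e6:.1f} us")
```

Under these assumptions, the mean wait is about 10 µs at 50% utilization, 90 µs at 90%, and 990 µs at 99%: nearly a hundredfold increase as the link approaches saturation, which is why congested networks feel so much slower.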

Why Latency Matters

- User Experience: High latency causes noticeable lag in applications such as video calls, online gaming, and live streaming. Delays disrupt communication quality and user satisfaction.

- Application Performance: Certain applications, particularly real-time ones, depend heavily on low latency. In financial trading, healthcare, and autonomous vehicles, even milliseconds of delay can have significant consequences.

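- Network Efficiency: High latency can reduce effective throughput. Protocols such as TCP wait for acknowledgments before sending more data, so a connection with a long round-trip time may not be able to use all of the available bandwidth.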
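
The throughput effect can be quantified with a simple bound. If a sender may have at most one window of unacknowledged data in flight and must wait about one round trip for acknowledgments, throughput cannot exceed window ÷ RTT. Conversely, keeping a link busy requires a window of at least bandwidth × RTT, the bandwidth-delay product. The Python sketch below assumes a fixed window and no packet loss; real TCP adjusts its window dynamically. The function names are illustrative.

```python
def max_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound on throughput when only `window_bytes` may be unacknowledged per RTT."""
    return window_bytes * 8 / rtt_s


def bandwidth_delay_product_bytes(rate_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep a link of `rate_bps` busy for one RTT."""
    return rate_bps * rtt_s / 8


if __name__ == "__main__":
    window = 65_535  # largest TCP window without the window scaling option
    for rtt_ms in (10, 100):
        mbps = max_throughput_bps(window, rtt_ms / 1000) / 1e6
        print(f"RTT {rtt_ms} ms: at most {mbps:.2f} Mbit/s")
    needed = bandwidth_delay_product_bytes(1e9, 0.1) / 1e6
    print(f"1 Gbit/s at 100 ms RTT needs {needed:.1f} MB in flight")
```

With a 65,535-byte window, a 10 ms RTT caps throughput at about 52.4 Mbit/s and a 100 ms RTT at about 5.2 Mbit/s, no matter how fast the underlying link is; this is why TCP stacks use window scaling on high-latency paths.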

Mitigating Latency

- Geographical Server Placement: Placing servers closer to users reduces the physical distance data must travel, cutting latency.

- Using Faster Transmission Media: Upgrading to fiber optics or newer wireless standards can reduce delays and retransmissions, lowering latency.

- Reducing Number of Hops: Optimizing routing paths and network design minimizes the number of intermediate devices data must pass through.

- Load Balancing and Edge Computing: Distributing workloads and deploying edge servers near users reduces congestion and response times.

- Optimizing Protocols and Software: Using more efficient protocols and lightweight web designs reduces the amount of data sent and the number of round trips needed, which lowers latency.

- Hardware Upgrades: Investing in modern, high-performance routers and switches improves packet processing speed.
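
Two of these strategies, moving servers closer and cutting round trips, can be compared with a back-of-the-envelope estimate: time to first response ≈ round trips × RTT. The Python sketch below assumes that RTT is just the fiber propagation time there and back (real paths add routing detours, hops, and queuing, so actual RTTs are higher). It also assumes that a request on a new TCP connection costs two round trips (handshake plus request/response) while a reused connection costs one. DNS lookups, encryption handshakes, and server processing are ignored unless passed in, and the distances are hypothetical.

```python
# Assumed signal speed in optical fiber: about two-thirds the speed of light.
FIBER_SPEED_M_PER_S = 2.0e8


def min_rtt_s(distance_km: float) -> float:
    """Lower bound on round-trip time: there and back at fiber signal speed."""
    return 2 * distance_km * 1000 / FIBER_SPEED_M_PER_S


def time_to_response_s(distance_km: float, new_connection: bool,
                       server_time_s: float = 0.0) -> float:
    """Estimate time until the first response for a simple request over TCP.

    Assumes 1 RTT for the TCP handshake on a new connection plus 1 RTT for
    the request/response itself; ignores DNS, encryption setup, and loss.
    """
    round_trips = 2 if new_connection else 1
    return round_trips * min_rtt_s(distance_km) + server_time_s


if __name__ == "__main__":
    for label, km in (("distant server", 8000), ("nearby edge server", 200)):
        for new in (True, False):
            ms = time_to_response_s(km, new) * 1000
            kind = "new connection" if new else "reused connection"
            print(f"{label} ({km} km), {kind}: {ms:.0f} ms")
```

Under these assumptions, a new connection to a server 8,000 km away needs at least about 160 ms before the first response, versus about 4 ms to an edge server 200 km away; reusing the connection halves each figure.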

Conclusion

Latency is a fundamental measure of networking responsiveness. It is influenced by physical distance, network infrastructure, traffic, and server capabilities. Reducing latency improves internet speed and the quality of online experiences, especially for latency-sensitive applications. Network designers and service providers continually implement strategies like edge computing and better hardware to combat latency for smoother, faster connectivity.

This understanding allows both users and professionals to appreciate why latency matters and how addressing its causes leads to better networking and internet performance.